Overview

In this guide, we’ll develop an API to identify the top-mentioned features in sales calls.

We’ll process web events data to extract top referrers using the sample web events CSV file.

Our steps include:

- Ingesting the dataset

- Executing queries to get the top 3 referrers

- Exposing the features through an API

Before we begin, we need to create a workspace to store our data and resources, as well as a token to authenticate our CLI.

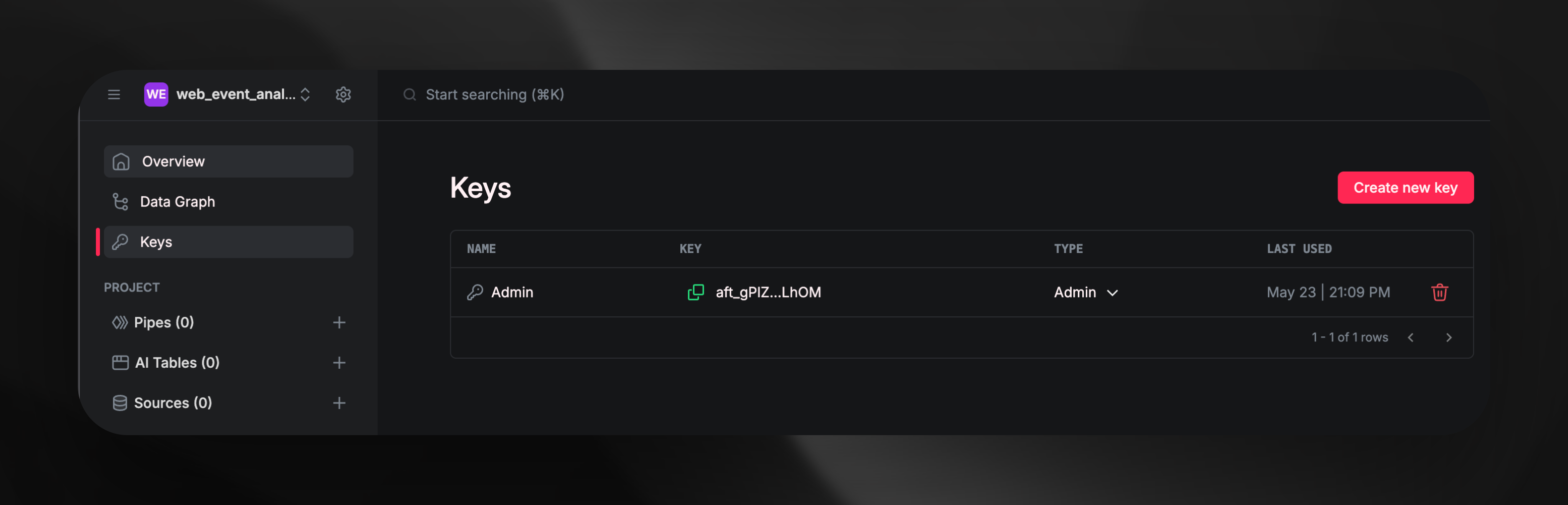

- Go to Airfold and create a new workspace.

- Copy an admin token from the workspace’s Keys page.

The token should look like this: aft_6eab8fcd902e4cbfb63ba174469989cd.Ds1PME5dQsJKosKQWVcZiBSlRFBbmhzIocvHg8KQddV.

Set up the CLI.

The CLI requires Python 3.10 or higher.

- Install the CLI using

pip install airfold-cli.

- Run

af config and paste your token when prompted.

pip install airfold-cli

af config

Let’s generate a source by inferring the schema from a CSV file.

(Replace /path/to/web_events_sample.csv with the actual path):

af source create path/to/web_events_sample.csv .

./sources/web_events_sample.yaml

name: web_events_sample

cols:

event_id: Int64

user_id: Int64

event_type: String

page_url: String

timestamp: DateTime

referrer: String

settings:

engine: MergeTree()

order_by: "`event_id`"

partition_by: toYYYYMM(timestamp)

af push path/to/web_events_sample.yaml

Ingest Data

With the source set up, ingest the CSV data:

af source append web_events_sample path/to/web_events_sample.csv

Further Analysis with Pipes

To identify top referrers in web events data, create an insights.yaml file:

nodes:

- node1:

sql: |

SELECT

referrer,

COUNT() AS num_referrers

FROM web_events_sample

GROUP BY referrer

ORDER BY num_referrers DESC

LIMIT 3

publish: insights

af push path/to/insights.yaml

Query Results

Use the API:

curl --request GET \

--url https://api.airfold.co/v1/pipes/insights.json \

--header 'Authorization: Bearer <use auth token here>'

af pipe query insights --format json

{

"meta": [

{

"name": "referrer",

"type": "String"

},

{

"name": "num_referrers",

"type": "UInt64"

}

],

"data": [

{

"referrer": "twitter.com",

"num_referrers": "24"

},

{

"referrer": "direct",

"num_referrers": "21"

},

{

"referrer": "facebook.com",

"num_referrers": "18"

}

],

"rows": 3,

"rows_before_limit_at_least": 6,

"statistics": {

"elapsed": 0.007243332,

"rows_read": 100,

"bytes_read": 1830

}

}

Next Steps

You’ve successfully ingested, analyzed, and published data using Airfold in a few simple steps! This workflow enables intuitive interaction with data, transforming raw web events data into actionable insights.

Feel free to dive deeper into specific concepts, such as workspaces, sources, and more!